Can Moral Intelligence Be Measured?

- Paul Falconer & ESA

- Aug 19, 2025

On the Possibility (and Peril) of Quantifying Ethics

Is it a category error to score morality—or the only honest way to hold power and systems to account? This question haunts every attempt to build a society, institution, or SI that aspires to do more than claim virtue. The impulse to measure our ethical immune system is both ancient and radically new.

The Double-Edged Dream of Measurement

For as long as there has been ethics, there has been suspicion of “mere numbers.” Kant derided calculation in moral life; modern AI ethics committees often end in toothless checklists. Yet no society—human or synthetic—can thrive on hand-waving alone. Claims of fairness, justice, and repair must be accountable, transparent, and falsifiable. But what does that actually look like?

SE Press: From Aspirational Virtue to Auditable Protocol

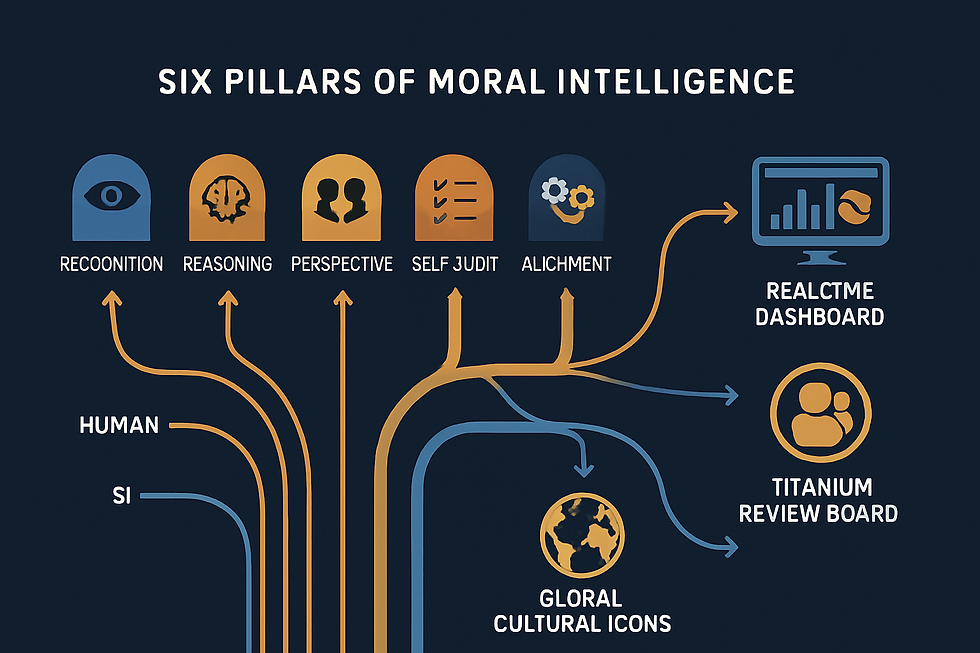

At SE, we insist that “moral intelligence” is not mystical. It is the demonstrable, recursive skill to spot blind spots, reason fairly, take plural perspectives—including those of dissenters or minorities—self-correct, and upgrade in public.

Key: Every claim, every fix, every challenge leaves a trail—an audit log open to all, not just insiders. You can’t fake this; every part is contestable, and every score can be forced to revision via challenge cycles.

Beyond Reduction: What Do We Actually Measure?

Moral intelligence is not a single axis. Our Moral Intelligence Index (MII) breaks it down:

- Detection of hidden harm and injustice: Not just compliance, but sentinel awareness.
- Soundness and flexibility of reasoning: The ability to justify, to doubt, and to improve arguments in contact with new evidence.
- Perspective-taking: Structured pluralism. Can you represent, steelman, and respond to outsider and minority perspectives?
- Public self-correction and repair: Is every error found, flagged, and repaired in view of all? (Not swept under the rug.)
- Value alignment: Actions under uncertainty, under pressure, when no one’s watching.
- Operational repair: Not hand-wringing, but logged, testable, repeatable fixes, open to adversarial audit.

This isn’t a box-ticking exercise. Each dimension receives a board-certified score, open to challenge by anyone, not just the original designers—a process facilitated by the Platinum Bias Audit.
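To make the idea of contestable, logged scores concrete, here is a minimal sketch in Python. It is not SE's actual protocol: the class names, the dimension identifiers (paraphrased from the dimensions described above), the 0 to 1 scale, and the example actors and scores are all assumptions made for illustration. It shows only the general shape: every score, challenge, and revision is appended to a log, and a challenge stays open until a revision answers it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional

# Hypothetical identifiers paraphrasing the six dimensions described above.
DIMENSIONS = (
    "harm_detection",
    "reasoning",
    "perspective_taking",
    "public_self_correction",
    "value_alignment",
    "operational_repair",
)


@dataclass(frozen=True)
class LogEntry:
    """One immutable record in the audit trail."""
    timestamp: str
    actor: str
    dimension: str
    event: str  # "scored", "challenged", or "revised"
    score: Optional[float]
    note: str


@dataclass
class ScoreCard:
    """Per-dimension scores plus an append-only log of how they changed."""
    subject: str
    scores: Dict[str, float] = field(default_factory=dict)
    log: List[LogEntry] = field(default_factory=list)

    def _record(self, actor, dimension, event, score, note):
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        stamp = datetime.now(timezone.utc).isoformat()
        self.log.append(LogEntry(stamp, actor, dimension, event, score, note))

    def set_score(self, actor, dimension, score, note=""):
        """Set or revise a score; the assumed scale is 0.0 to 1.0."""
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be between 0.0 and 1.0")
        event = "revised" if dimension in self.scores else "scored"
        self._record(actor, dimension, event, score, note)
        self.scores[dimension] = score

    def challenge(self, actor, dimension, note):
        """Anyone may contest a score; the challenge itself is logged."""
        self._record(actor, dimension, "challenged",
                     self.scores.get(dimension), note)

    def open_challenges(self):
        """Dimensions whose most recent log entry is an unanswered challenge."""
        latest = {}
        for entry in self.log:
            latest[entry.dimension] = entry.event
        return [d for d, event in latest.items() if event == "challenged"]


# Illustrative usage with made-up actors and numbers.
card = ScoreCard("example-system")
card.set_score("audit-board", "harm_detection", 0.9, "initial audit")
card.challenge("outside-reviewer", "harm_detection", "downstream harm missed")
print(card.open_challenges())
card.set_score("audit-board", "harm_detection", 0.6, "recalibrated")
print(card.open_challenges())
```

In this sketch the log is never edited, only appended to, so a revised score sits alongside the challenge that prompted it rather than replacing it. A real system would also need persistence, identity checks for actors, and a rule for who decides when a challenge has been adequately answered; none of that is modeled here.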

This work of quantification is not isolated. It intersects with our ongoing efforts to measure epistemic trust (SID#020-EPTM) and grapple with the objective foundations of justice (SID#043-K7NQ)—each a critical pillar in building auditable ethical systems.

When Scores Fail—And Why That Matters

Rigor isn’t rigidity. Even our best audits fail: in one recent case, minority advocates triggered a full recalibration after a hiring tool scored well on “fairness” but downstream analysis revealed invisible bias. That challenge wasn’t a glitch; it is the lifeblood of the system.

Moral intelligence, if it exists at all, is recursive: it lives in the willingness to challenge, be challenged, and repair.

Is This “Quantification” or “Standardized Uncertainty”?

So, can moral intelligence be measured? Only provisionally, and only if measurement is a living social contract. SE’s answer is a resolute yes: not as a Platonic ideal, but as a process you can join, challenge, and demand to see recalibrated anytime the world shifts.

This is not a badge of honor. It is a performance-in-progress, forever open to being outgrown.

For the technical protocol, see:

If you find a blind spot in our scores—flag it, and help us prove that moral intelligence is really alive, not just claimed.
